Artificial Intelligence: A Double-Edged Sword for Criminal Justice

Artificial intelligence (AI) is rapidly transforming nearly every industry, from healthcare to transportation. AI refers to computer systems that can perform tasks normally requiring human intelligence, such as visual perception, speech recognition, and decision-making. With massive datasets and advanced algorithms, AI can, for some tasks, analyze information and make predictions faster than humans, and sometimes more accurately.

While AI holds great promise, it also poses risks, especially regarding bias and lack of transparency. As AI systems make ever more consequential decisions – from approving loans to making medical diagnoses – ethical challenges abound. This is particularly true in the criminal justice system, where AI is being deployed in pre-trial, sentencing, and parole decisions.

*Featured image generated using Midjourney AI to keep things interesting.

AI in Criminal Justice

On the one hand, AI could help make the criminal justice system fairer and more effective. For example, algorithmic risk assessments are now frequently used to recommend bail and sentencing terms. These standardized tools are intended to avoid the biases that human decision-makers often demonstrate. However, critics argue that the algorithms themselves may discriminate against minorities and other groups because they learn from historical data that reflects past prejudices.

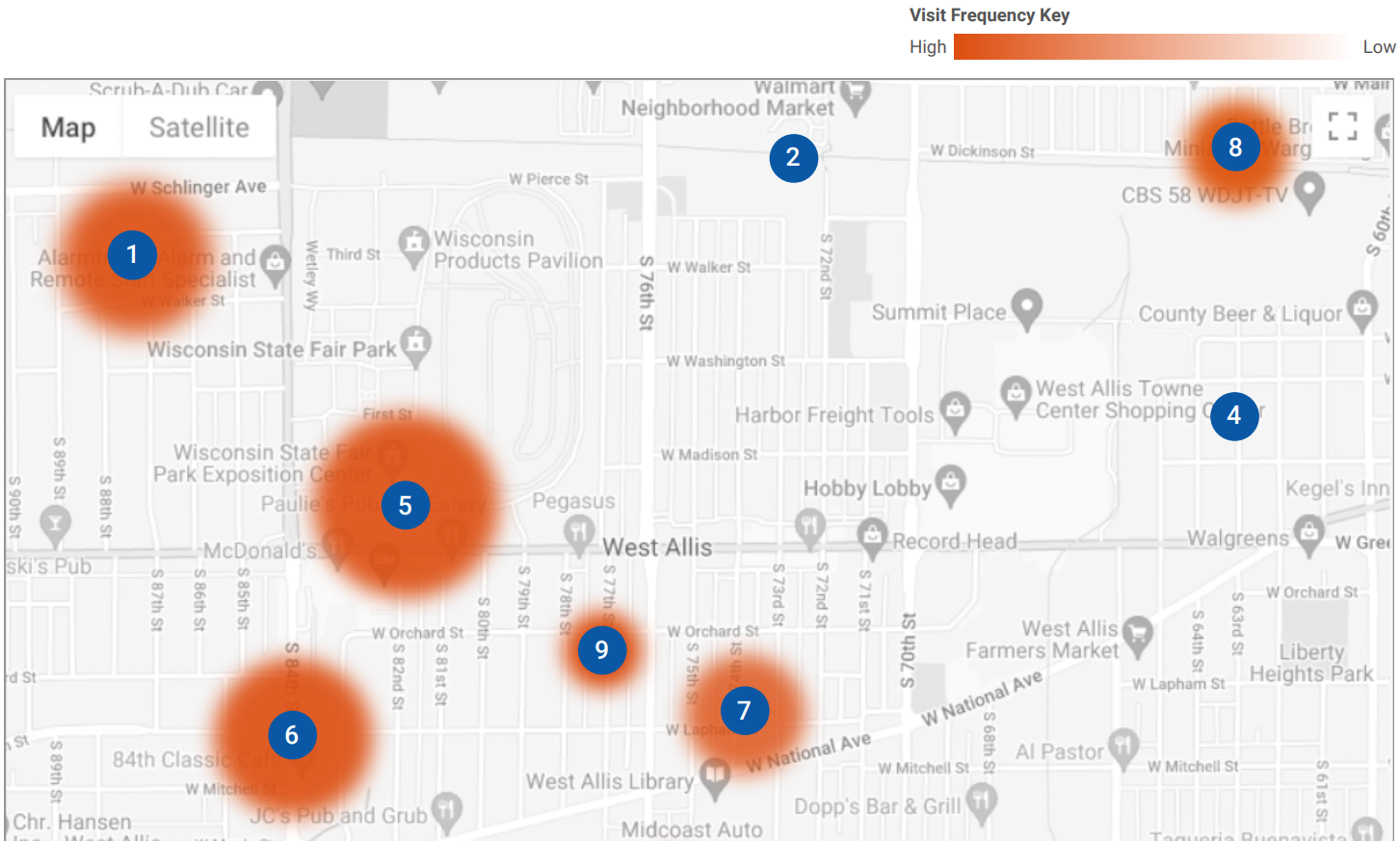

AI is also increasingly used for predictive policing – analyzing crime data to forecast where crime is most likely to occur and which individuals are most likely to offend. While backers say this allows better allocation of resources, opponents argue it unfairly targets and criminalizes communities based on statistical patterns rather than evidence of wrongdoing. Facial recognition AI, deployed to aid investigations and surveillance, has also faced criticism for misidentifying people of color at higher rates.

The main concerns regarding AI in criminal justice are lack of transparency, potential bias, and misplaced reliance on machines over human discretion. AI systems are often proprietary “black boxes”, obscuring how they produce recommendations. Even experts struggle to explain the reasoning behind some advanced algorithms, like neural networks.

Once AI becomes entrenched in the system, it may be difficult to root out biases or correct unfair outcomes. If the computer says to deny parole, that recommendation may carry undue weight regardless of its underlying accuracy. For these reasons, many argue that AI should at most inform – not replace – human decision-makers in criminal justice. Oversight, audits, and an understanding of AI's limitations are crucial.
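To make the idea of an audit concrete, here is a minimal sketch of one check an auditor might run: comparing false positive rates across groups, meaning the share of people who did not reoffend but were still flagged as high risk. The function name, the record layout, and the toy records are all hypothetical and invented for illustration. They are not drawn from any real tool or dataset, and a real audit would look at many more measures than this one.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return, per group, the share of non-reoffenders flagged as high risk.

    `records` is an iterable of (group, flagged_high_risk, reoffended)
    tuples. This layout is an assumption made for this sketch.
    """
    flagged = defaultdict(int)  # non-reoffenders flagged high risk, per group
    total = defaultdict(int)    # all non-reoffenders, per group
    for group, flagged_high_risk, reoffended in records:
        if not reoffended:
            total[group] += 1
            if flagged_high_risk:
                flagged[group] += 1
    # Groups with no non-reoffenders never enter `total`, so no division by zero.
    return {group: flagged[group] / total[group] for group in total}


# Made-up toy records, for illustration only.
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", False, True),
]

rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false positive rate {rate:.2f}")
```

A large gap between groups on a measure like this would not by itself prove discrimination, but it is the kind of signal that should prompt a closer human review of the tool.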

The Risks and Potential of AI in Community Corrections

These AI debates take on another dimension in the context of community corrections. Supervising and reintegrating offenders on probation or parole presents distinct challenges. Could emerging AI technologies enhance public safety and rehabilitation – or further marginalize vulnerable individuals?

In community corrections, AI is primarily used for risk assessment and monitoring. Some agencies now use AI-powered apps and GPS devices that predict a person's likelihood of recidivating or violating parole conditions. These tools trade traditional face-to-face meetings for continuous data-driven insights and intervention.

Proponents argue 24/7 monitoring allows tighter control over high-risk caseloads. AI could also anticipate problems – like drug or alcohol use – and trigger preventive responses. This might improve outcomes and allow probation officers to focus their personal attention on the hardest cases. Machine learning applied to large datasets may also better identify the factors most predictive of success versus failure.

However, AI-guided monitoring relies on the vast collection of personal data – locations, conversations, habits – which raises ethical concerns. Particular concern arises when algorithms infer mental states – emotions, substance abuse, dangerousness – from superficial signals. Even with disclaimers that AI is an aid and not a substitute for human judgment, in practice its assessments may still unduly shape decisions about restrictions, sanctions, or revocation.

The Right Mix: AI Paired With Human Judgment

The path forward likely involves judicious use of AI as one tool among many, while reserving final judgment for human officers with contextual knowledge of each case. With thoughtful design and oversight, AI may enhance community corrections by synthesizing more information more quickly. But it must not impose technological determinism about people’s potential. Probation and parole put people’s freedoms in the state’s hands; that power and trust must remain under human control.